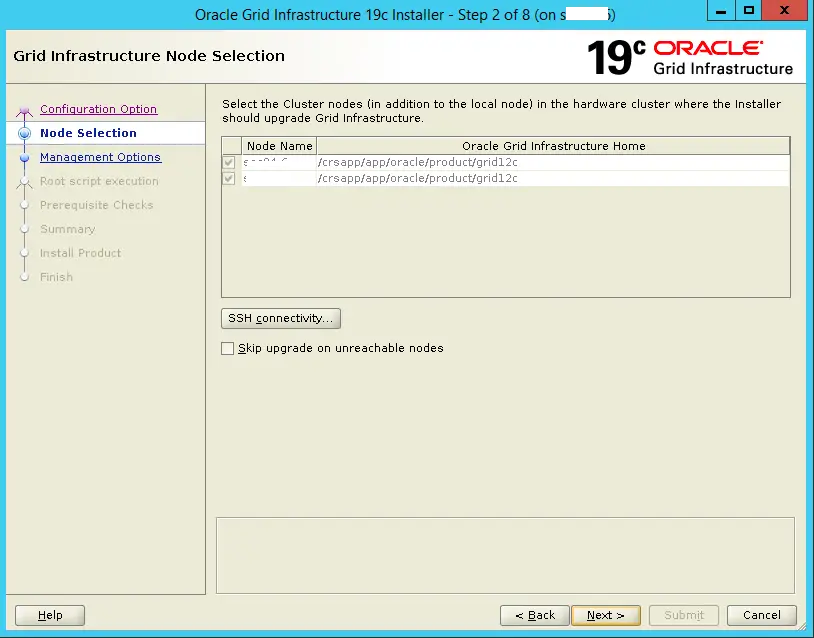

Oracle 19c is the latest Oracle release at the time of writing. This article explains the steps for upgrading Oracle Grid Infrastructure from 12c to 19c. The upgrade is done in rolling mode.

Current configuration:

Number of nodes: 2

Current version: 12.1.0.2

OS platform: Solaris

Grid owner: oracle

Check the software version:

oracle@node1~$ crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [12.1.0.2.0]

oracle@node2~$ crsctl query crs softwareversion

Oracle Clusterware version on node node1 is [12.1.0.2.0]
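If the version check is scripted, for example to compare nodes before and after the upgrade, the bracketed version has to be pulled out of the `crsctl` output. The following is a minimal sketch, assuming the output keeps the format shown above. `crs_version_of` is a hypothetical helper name, not an Oracle tool.

```shell
# Hypothetical helper: print the last [bracketed] value found in one line of
# crsctl output. Assumes the version is the last bracketed token on the line.
crs_version_of() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([^]]*\)\][^[]*$/\1/p'
}

# Example use on a cluster node, as the grid owner:
#   crs_version_of "$(crsctl query crs activeversion)"
```

Taking the last bracketed token also covers output where the node name is printed in brackets before the version.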

PRECHECKS:

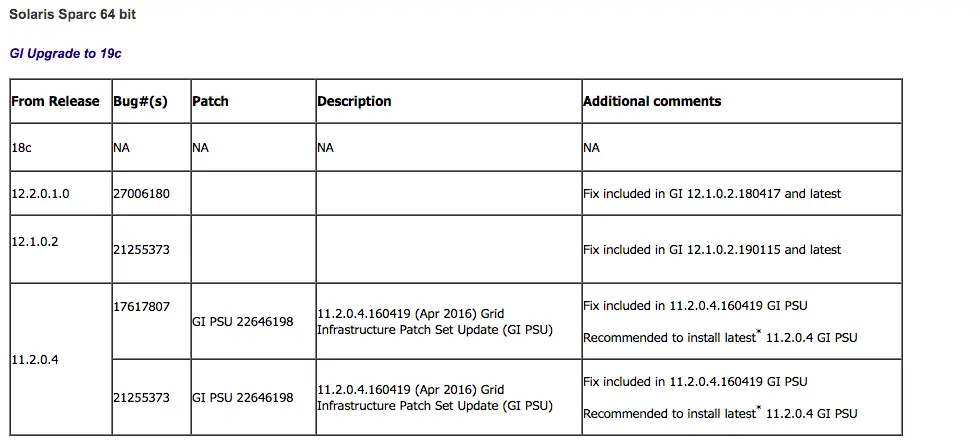

1. Apply required patches to your existing 12c grid.

The required patches depend on the OS platform and the current patch level. Refer to the relevant My Oracle Support (Metalink) note to find which patches your setup needs.

For our Solaris setup, the JAN2019 bundle patch (GI) was already applied, so no further patches were needed.

2. Download the software and unzip on your first node

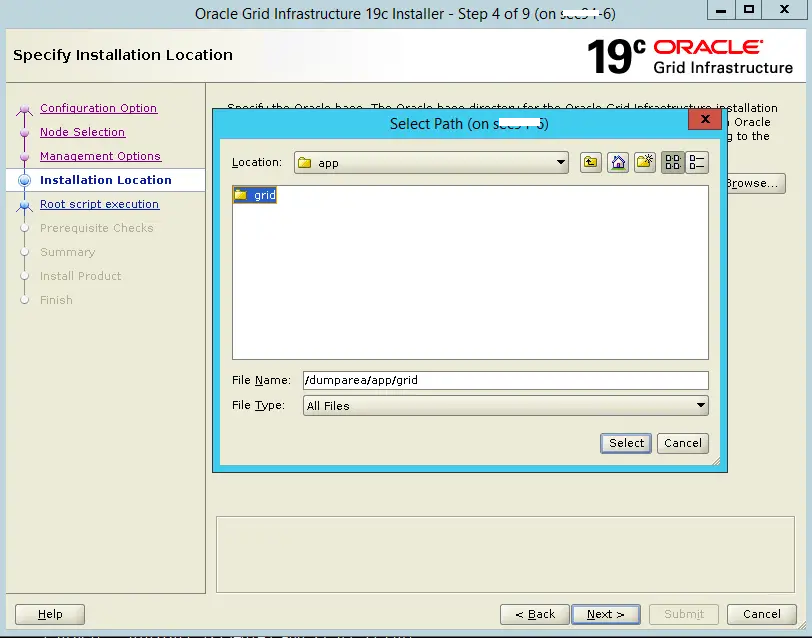

Create the new grid home for Oracle 19c.

mkdir -p /dumparea/oracle/app/grid19c

Unzip the software into this home location (the grid home is where we unzip the software).

cd /dumparea/oracle/app/grid19c

unzip -q /dumparea/SOLARIS.SPARC64_193000_grid_home.zip
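Because the software is unzipped directly into the new grid home, it can help to guard against a missing zip file or a target directory that already has content. A minimal sketch of such a guard follows. `prepare_grid_home` is a hypothetical helper, and the "directory must be empty" rule is an assumption made here for safety rather than something stated in this article.

```shell
# Hypothetical helper: create the new grid home and unzip the software into it.
#   $1: new grid home directory
#   $2: path to the grid software zip file
prepare_grid_home() {
    if [ ! -f "$2" ]; then
        echo "zip file not found: $2" >&2
        return 1
    fi
    mkdir -p "$1" || return 1
    # Assumption: refuse to unzip over existing content
    if [ -n "$(ls -A "$1")" ]; then
        echo "target directory is not empty: $1" >&2
        return 1
    fi
    (cd "$1" && unzip -q "$2")
}

# Example use, as the grid owner:
#   prepare_grid_home /dumparea/oracle/app/grid19c \
#       /dumparea/SOLARIS.SPARC64_193000_grid_home.zip
```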

3. Run the orachk tool as grid owner (oracle)

The orachk tool generates a report of recommendations that need to be taken care of before upgrading.

export GRID_HOME=/dumparea/oracle/app/grid19c

cd /dumparea/oracle/app/grid19c/suptools/orachk/

./orachk -u -o pre

Analyze the HTML report for any recommendations.

4. Run cluvfy as grid owner (oracle)

cd /dumparea/oracle/app/grid19c

Syntax:

./runcluvfy.sh stage -pre crsinst -upgrade -rolling -src_crshome <source_grid_home> -dest_crshome <destination_grid_home> -dest_version 19.0.0.0.0 -fixup -verbose

For example:

./runcluvfy.sh stage -pre crsinst -upgrade -rolling -src_crshome /drcrs/app/oracle/product/grid12c -dest_crshome /dumparea/oracle/app/grid19c -dest_version 19.0.0.0.0 -fixup -verbose

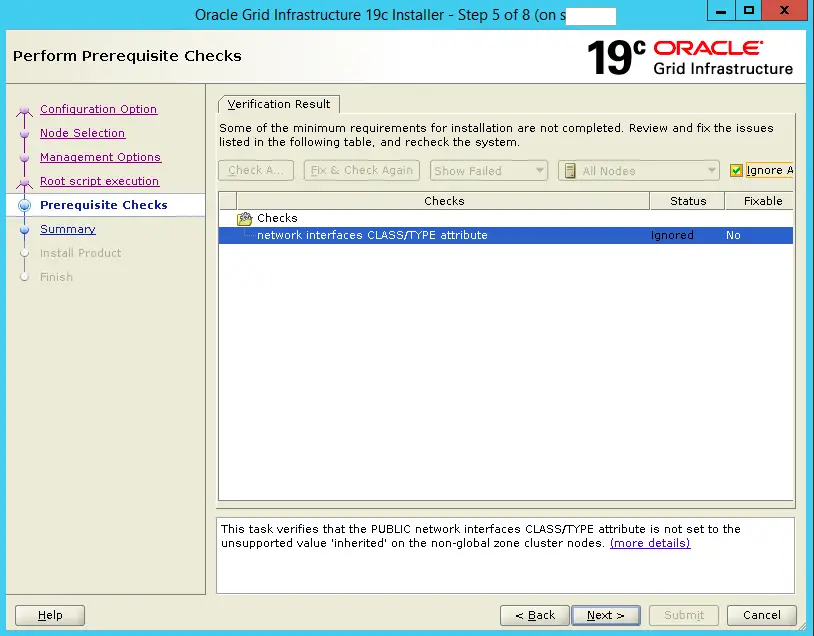

./runcluvfy.sh stage -pre crsinst -upgrade -rolling -src_crshome /drcrs/app/oracle/product/grid12c -dest_crshome /dumparea/oracle/app/grid19c -dest_version 19.0.0.0.0 -fixup -verbose

Verifying Physical Memory ...

Node Name     Available                 Required                  Status

------------  ------------------------  ------------------------  ----------

snode1        254GB (2.66338304E8KB)    8GB (8388608.0KB)         passed

snode2        254GB (2.66338304E8KB)    8GB (8388608.0KB)         passed

Verifying Physical Memory ...PASSED

Verifying Available Physical Memory ...

Node Name     Available                 Required                  Status

------------  ------------------------  ------------------------  ----------

snode1        8.5611GB (8977000.0KB)    50MB (51200.0KB)          passed

snode2        7.3874GB (7746264.0KB)    50MB (51200.0KB)          passed

Verifying Available Physical Memory ...PASSED

Verifying Swap Size ...

Node Name     Available                 Required                  Status

------------  ------------------------  ------------------------  ----------

snode1        256GB (2.68435448E8KB)    16GB (1.6777216E7KB)      passed

snode2        256GB (2.68435448E8KB)    16GB (1.6777216E7KB)      passed

. . . .

Verifying network interfaces CLASS/TYPE attribute ...PASSED

Verifying loopback network interface address ...PASSED

Verifying Privileged group consistency for upgrade ...PASSED

Verifying CRS user Consistency for upgrade ...PASSED

Verifying Clusterware Version Consistency ...

Verifying cluster upgrade state ...PASSED

Verifying Clusterware Version Consistency ...PASSED

Verifying Check incorrectly sized ASM Disks ...PASSED

Verifying Network configuration consistency checks ...PASSED

Verifying File system mount options for path GI_HOME ...PASSED

Verifying OLR Integrity ...PASSED

Verifying Verify that the ASM instance was configured using an existing ASM parameter file. ...PASSED

Verifying User Equivalence ...PASSED

Verifying IP hostmodel ...PASSED

Verifying File system mount options for path /var ...PASSED

Verifying Multiuser services check ...PASSED

Verifying ASM Filter Driver configuration ...PASSED

Pre-check for cluster services setup was successful.

CVU operation performed: stage -pre crsinst

Date: Aug 27, 2019 9:30:53 AM

CVU home: /dumparea/oracle/app/grid19c/

User: oracle

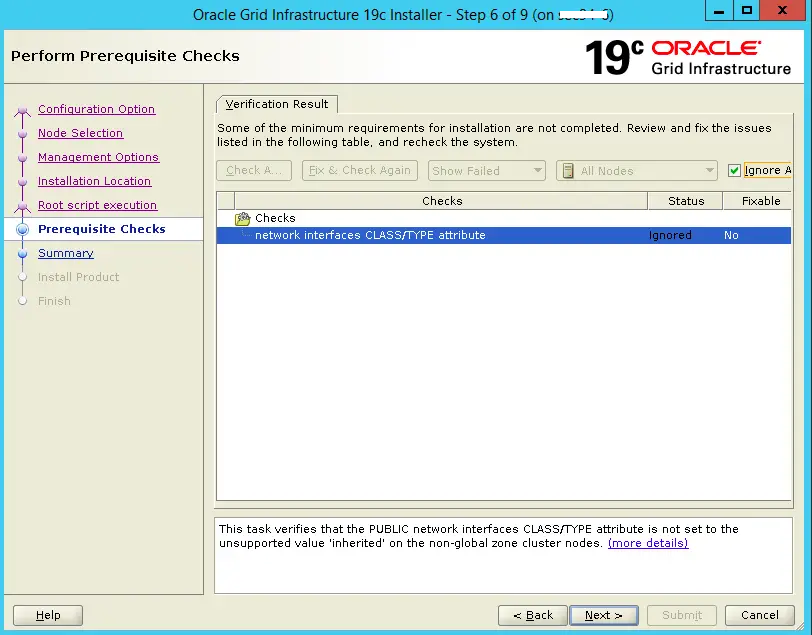

If any errors are reported, fix them before proceeding further.
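With `-verbose` the cluvfy output is long, so it is easy to miss a check that did not pass. The sketch below filters a saved copy of the output down to the check lines whose final status is anything other than PASSED. It assumes that non-passing checks end with another upper-case status word (such as FAILED or WARNING) in the same `...STATUS` position. `cluvfy_not_passed` is a hypothetical helper name.

```shell
# Hypothetical helper: read saved cluvfy output on stdin and print every
# "Verifying ... ...STATUS" line whose STATUS is not PASSED.
# Header lines that end in "..." with no status word are skipped.
cluvfy_not_passed() {
    grep -E '^Verifying .*\.\.\.[A-Z]+$' | grep -v '\.\.\.PASSED$'
}

# Example use, after saving the cluvfy run to a file:
#   ./runcluvfy.sh stage -pre crsinst ... -verbose > /tmp/cluvfy_pre.log
#   cluvfy_not_passed < /tmp/cluvfy_pre.log
```

An empty result means every check line ended in PASSED. The full report should still be read for the details behind any line that is printed.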

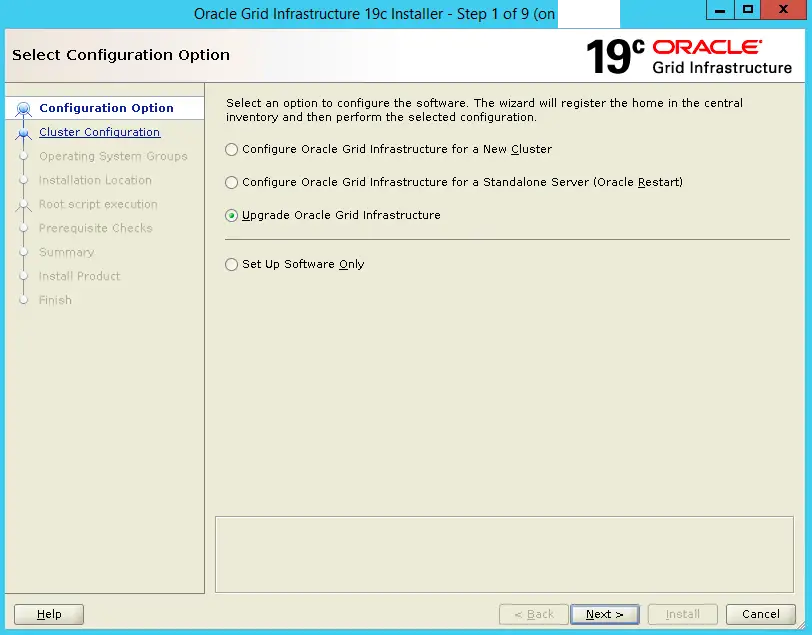

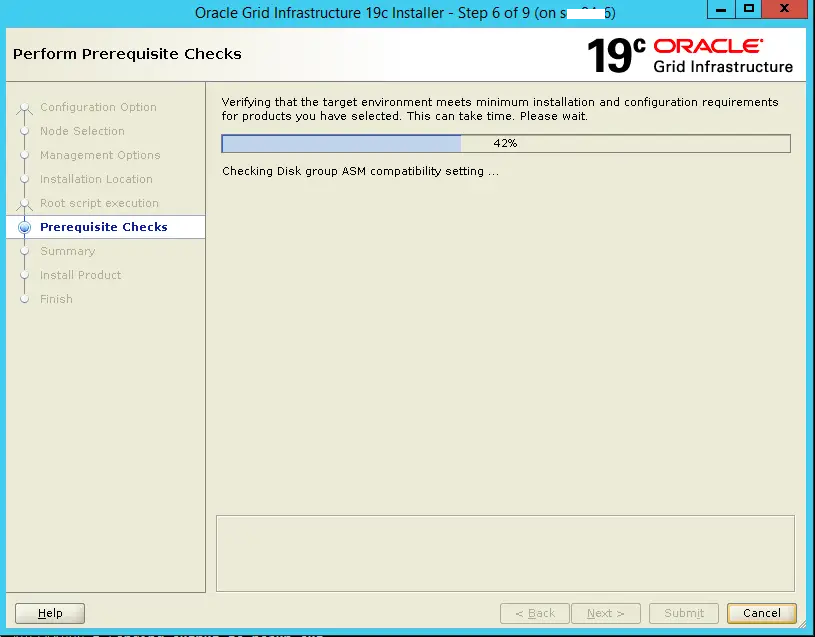

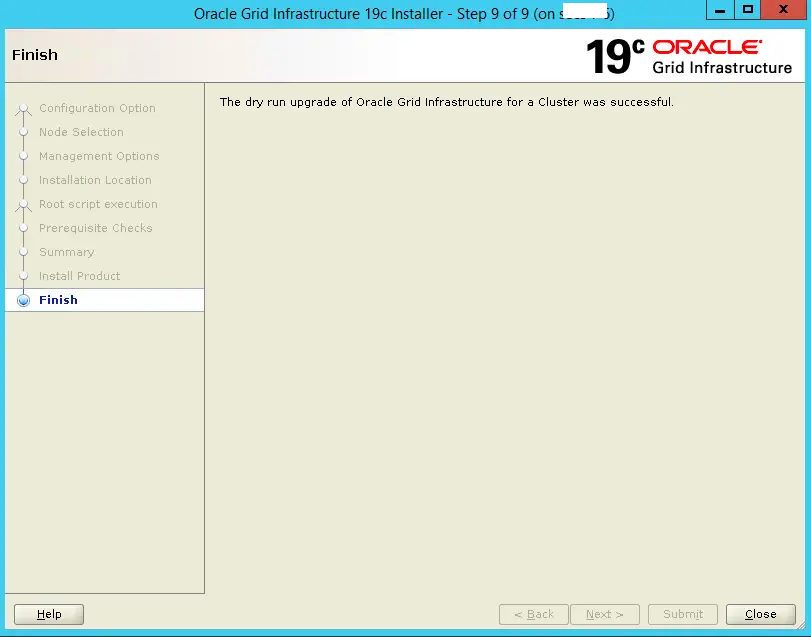

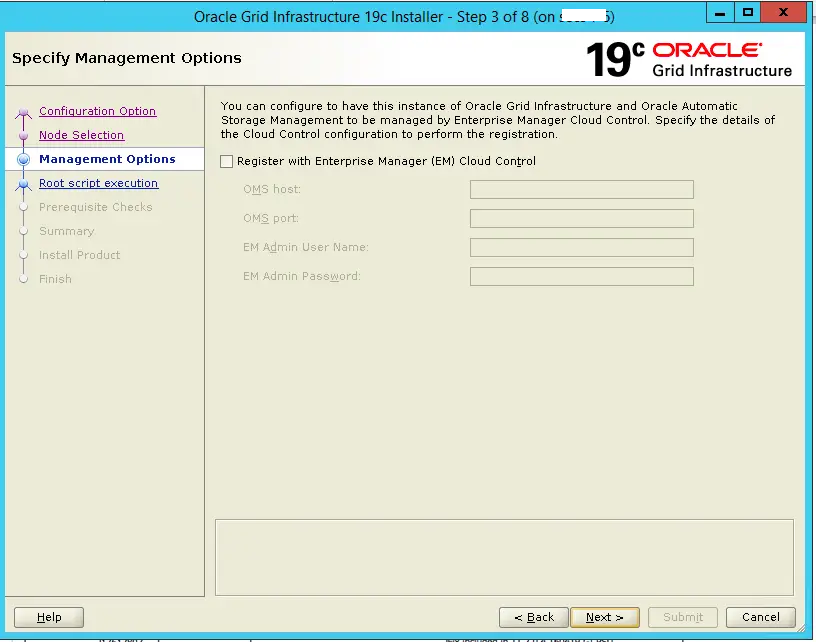

DRY RUN PHASE (GUI method):

The dry run phase does not make any changes to the existing grid setup. It only checks the system readiness.

As per the Oracle note, the dry run performs the following activities:

- Validates storage and network configuration for the new release

- Checks if the system meets the software and hardware requirements for the new release

- Checks for the patch requirements and applies necessary patches before starting the upgrade

- Writes system configuration issues or errors in the gridSetupActions<timestamp>.log log file

NOTE: A few users commented that the dry run phase restarted their grid. If you are doing this on production, please consider this risk.

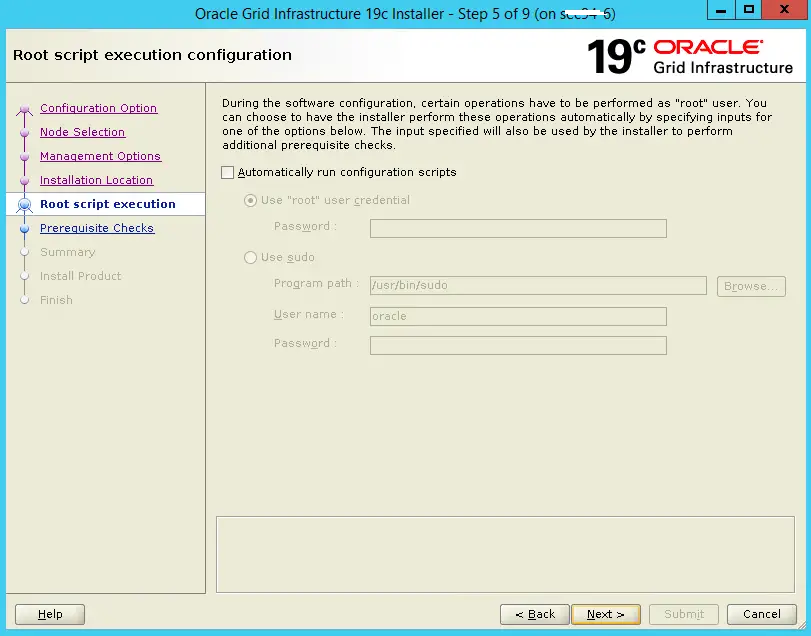

Run as grid owner (oracle):

unset ORACLE_BASE

unset ORACLE_HOME

unset ORACLE_SID

cd /dumparea/oracle/app/grid19c

./gridSetup.sh -dryRunForUpgrade
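Since the installer is started with `ORACLE_BASE`, `ORACLE_HOME` and `ORACLE_SID` unset, a quick check before launching it can catch a variable that a login profile has set again. A minimal sketch, where `oracle_env_still_set` is a hypothetical helper name:

```shell
# Hypothetical helper: print the name of each Oracle environment variable
# that is still set in the current shell (one per line).
oracle_env_still_set() {
    for var in ORACLE_BASE ORACLE_HOME ORACLE_SID; do
        # ${NAME+x} expands to "x" only when NAME is set, even if it is empty
        if eval "[ -n \"\${$var+x}\" ]"; then
            printf '%s\n' "$var"
        fi
    done
}

# Example use before starting gridSetup.sh:
#   oracle_env_still_set    # no output means all three are unset
```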

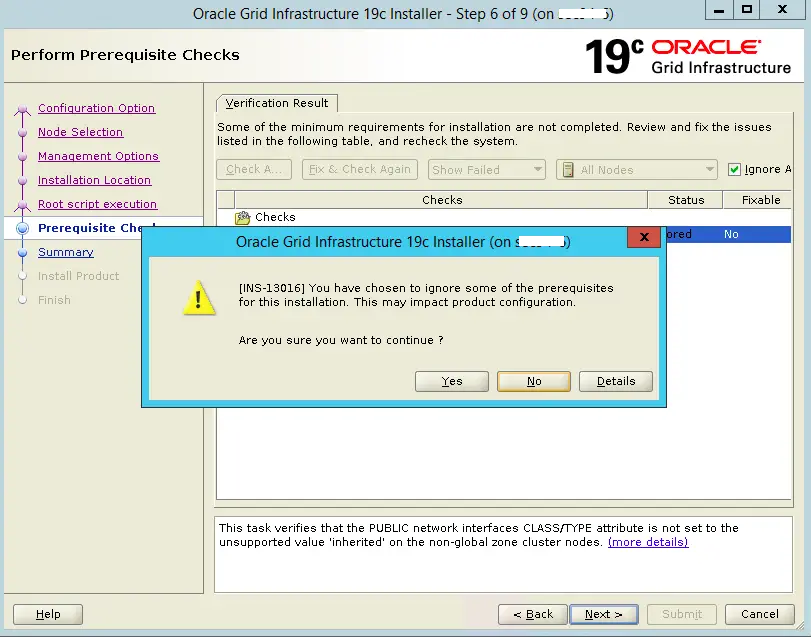

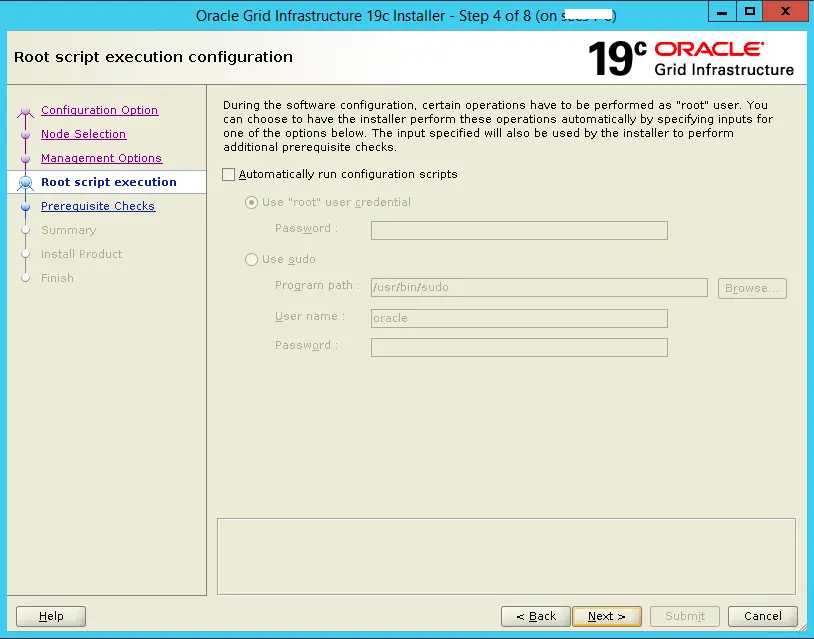

This warning can be ignored.

Run the root script only on the local node.

NOTE: Do not run this on the remote node. Run it only on the local node.

root@node1:~# /dumparea/oracle/app/grid19c/rootupgrade.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /dumparea/oracle/app/grid19c

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:

Entries will be added to the /var/opt/oracle/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Performing Dry run of the Grid Infrastructure upgrade.

Using configuration parameter file: /dumparea/oracle/app/grid19c/crs/install/crsconfig_params

The log of current session can be found at:

/dumparea/app/grid/crsdata/node1/crsconfig/rootcrs__2019-08-27_10-37-30AM.log

2019/08/27 10:38:07 CLSRSC-464: Starting retrieval of the cluster configuration data

2019/08/27 10:38:20 CLSRSC-729: Checking whether CRS entities are ready for upgrade, cluster upgrade will not be attempted now. This operation may take a few minutes.

2019/08/27 10:42:04 CLSRSC-693: CRS entities validation completed successfully.

The dry run upgrade is successful. Let's proceed with the actual upgrade.

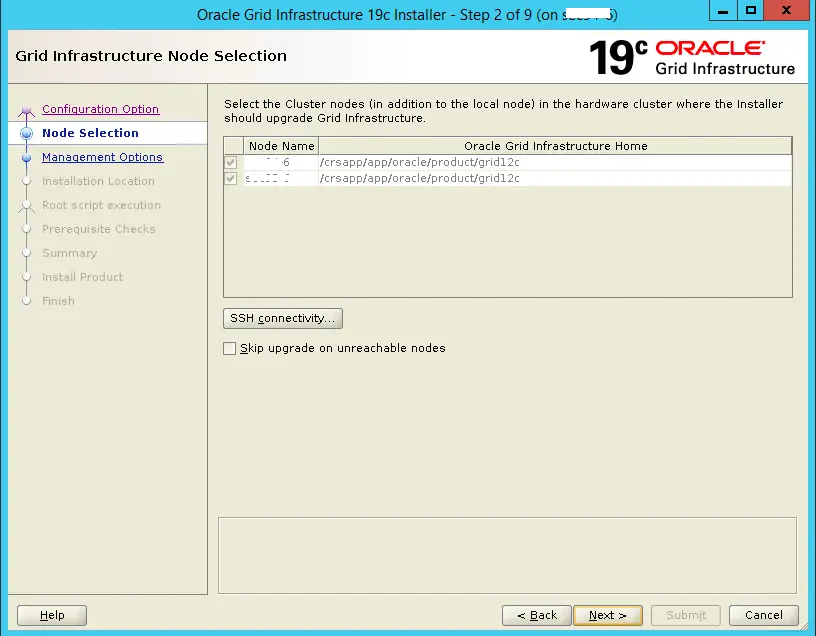

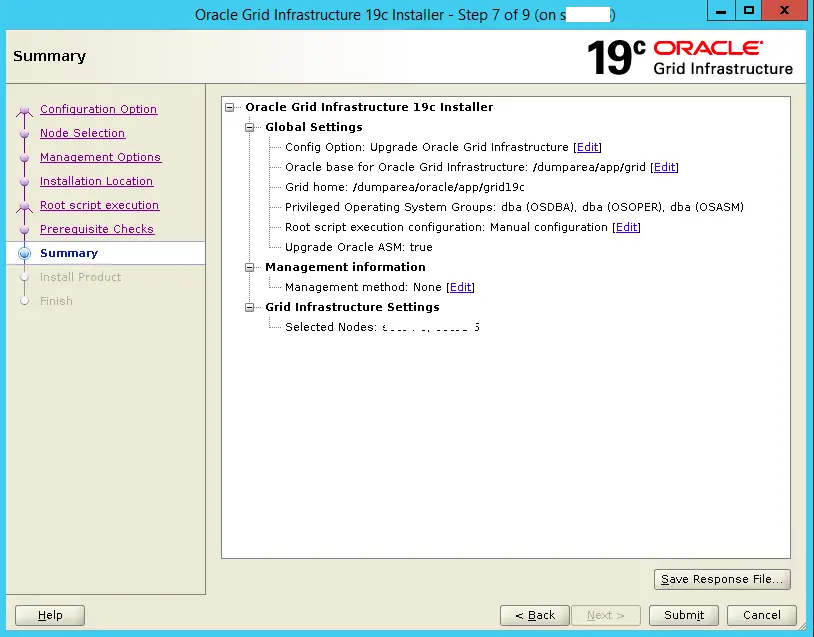

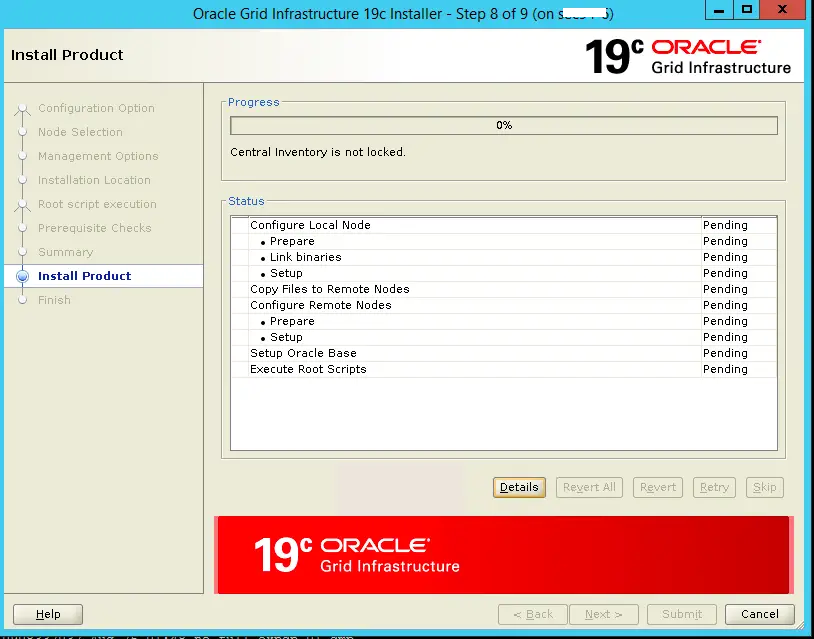

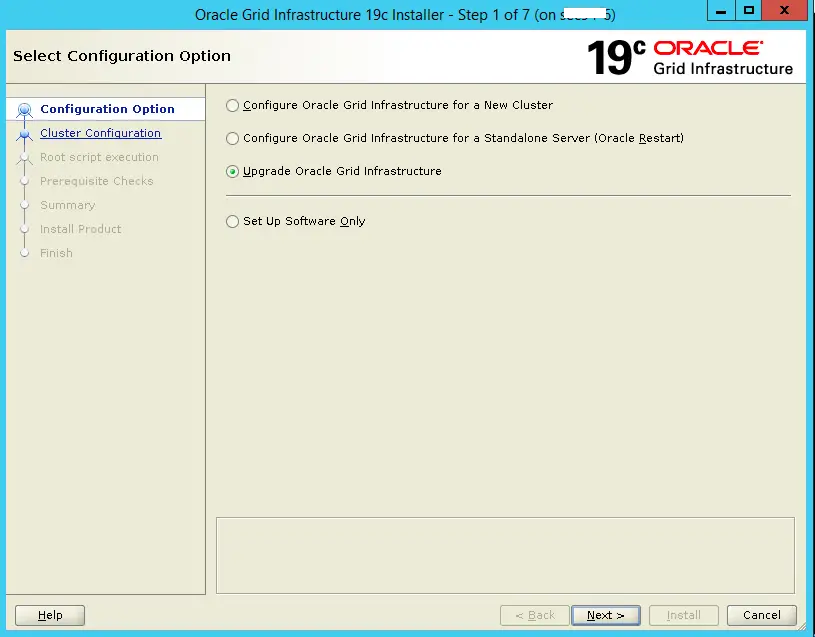

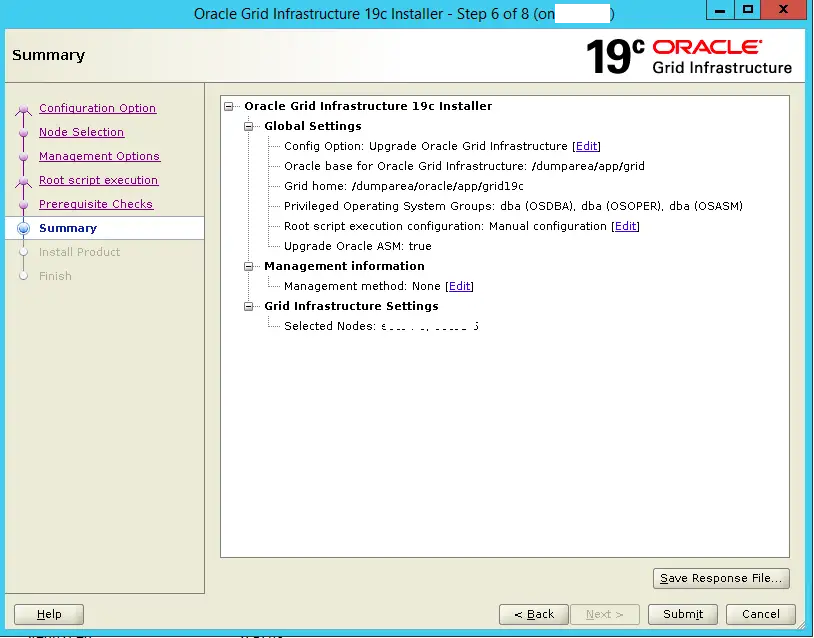

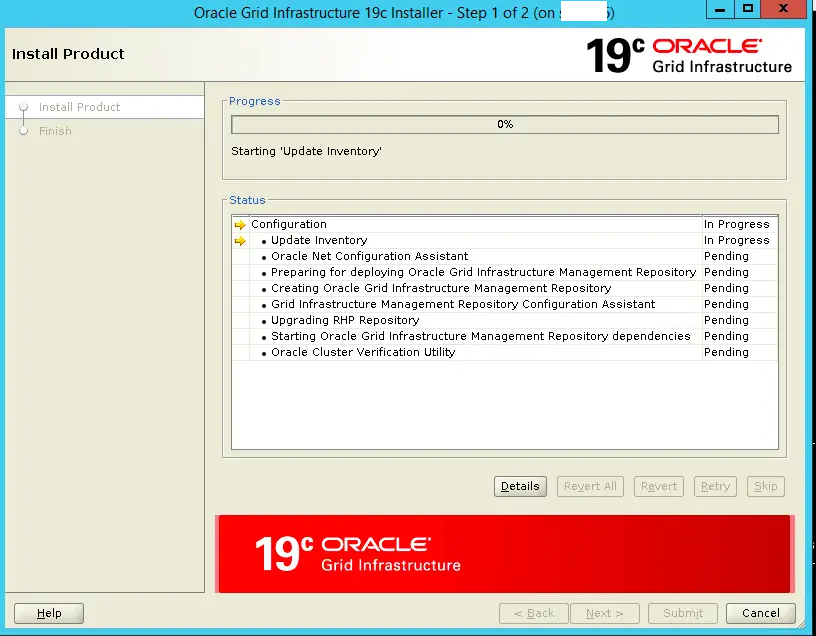

ACTUAL UPGRADE:

Now we will proceed with the actual upgrade in rolling mode.

Run as grid owner (oracle):

unset ORACLE_BASE

unset ORACLE_HOME

unset ORACLE_SID

cd /dumparea/oracle/app/grid19c

./gridSetup.sh

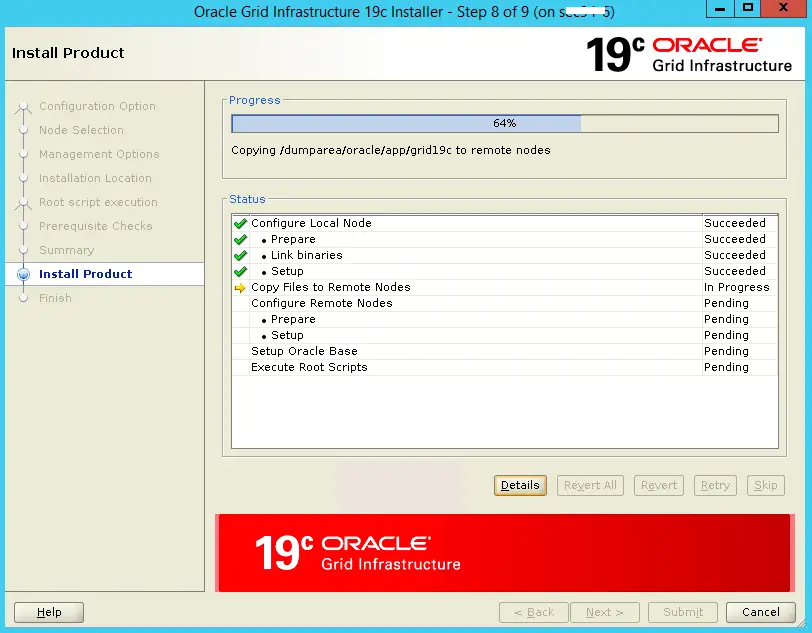

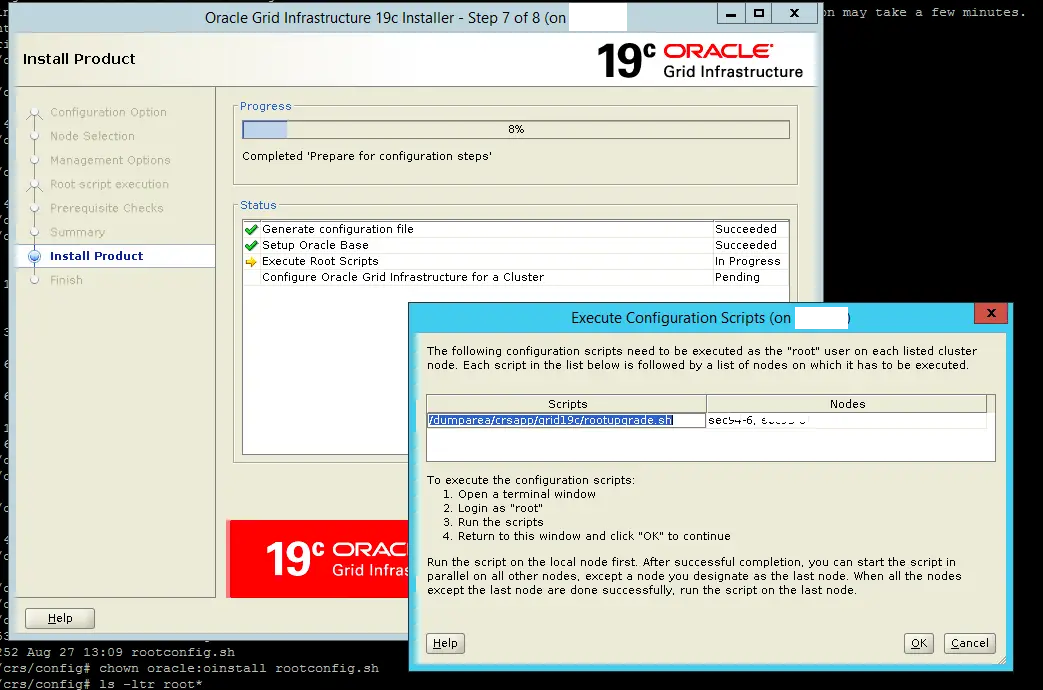

Now run rootupgrade.sh on the local node first. Once it is successful on the local node, proceed to the remote node.

rootupgrade.sh script on node 1 (as root user):

root@node1~# /dumparea/crsapp/grid19c/rootupgrade.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /dumparea/crsapp/grid19c

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:

Entries will be added to the /var/opt/oracle/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /dumparea/crsapp/grid19c/crs/install/crsconfig_params

The log of current session can be found at:

/dumparea/orabase/crsdata/node1/crsconfig/rootcrs_node_1_2019-08-27_02-50-29PM.log

2019/08/27 14:50:46 CLSRSC-595: Executing upgrade step 1 of 18: 'UpgradeTFA'.

2019/08/27 14:50:47 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.

2019/08/27 14:50:47 CLSRSC-4012: Shutting down Oracle Trace File Analyzer (TFA) Collector.

2019/08/27 14:51:14 CLSRSC-4013: Successfully shut down Oracle Trace File Analyzer (TFA) Collector.

2019/08/27 14:51:14 CLSRSC-595: Executing upgrade step 2 of 18: 'ValidateEnv'.

2019/08/27 14:51:17 CLSRSC-595: Executing upgrade step 3 of 18: 'GetOldConfig'.

2019/08/27 14:51:17 CLSRSC-464: Starting retrieval of the cluster configuration data

2019/08/27 14:51:24 CLSRSC-692: Checking whether CRS entities are ready for upgrade. This operation may take a few minutes.

2019/08/27 14:51:27 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.

2019/08/27 14:55:10 CLSRSC-693: CRS entities validation completed successfully.

2019/08/27 14:55:24 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.

2019/08/27 14:55:24 CLSRSC-595: Executing upgrade step 4 of 18: 'GenSiteGUIDs'.

2019/08/27 14:55:26 CLSRSC-595: Executing upgrade step 5 of 18: 'UpgPrechecks'.

2019/08/27 14:55:27 CLSRSC-595: Executing upgrade step 6 of 18: 'SetupOSD'.

2019/08/27 14:55:27 CLSRSC-595: Executing upgrade step 7 of 18: 'PreUpgrade'.

2019/08/27 14:55:28 CLSRSC-595: Executing upgrade step 8 of 18: 'CheckCRSConfig'.

2019/08/27 14:55:32 CLSRSC-595: Executing upgrade step 9 of 18: 'UpgradeOLR'.

2019/08/27 14:55:33 CLSRSC-595: Executing upgrade step 10 of 18: 'ConfigCHMOS'.

2019/08/27 14:55:34 CLSRSC-595: Executing upgrade step 11 of 18: 'UpgradeAFD'.

2019/08/27 14:55:36 CLSRSC-595: Executing upgrade step 12 of 18: 'createOHASD'.

2019/08/27 14:55:38 CLSRSC-595: Executing upgrade step 13 of 18: 'ConfigOHASD'.

2019/08/27 14:55:44 CLSRSC-595: Executing upgrade step 14 of 18: 'InstallACFS'.

2019/08/27 14:55:47 CLSRSC-595: Executing upgrade step 15 of 18: 'InstallKA'.

2019/08/27 14:55:56 CLSRSC-595: Executing upgrade step 16 of 18: 'UpgradeCluster'.

clscfg: EXISTING configuration version 5 detected.

Successfully taken the backup of node specific configuration in OCR.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

2019/08/27 15:00:01 CLSRSC-343: Successfully started Oracle Clusterware stack

2019/08/27 15:00:16 CLSRSC-595: Executing upgrade step 17 of 18: 'UpgradeNode'.

2019/08/27 15:00:22 CLSRSC-474: Initiating upgrade of resource types

2019/08/27 15:02:49 CLSRSC-475: Upgrade of resource types successfully initiated.

2019/08/27 15:03:30 CLSRSC-595: Executing upgrade step 18 of 18: 'PostUpgrade'.

2019/08/27 15:03:47 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded
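Before moving on to the next node, it is worth confirming that the root script really finished. The sketch below checks a captured copy of the rootupgrade.sh output for the final CLSRSC-325 "succeeded" message and reports the last upgrade step that was started. Both function names are hypothetical, and the patterns assume the message format shown in the output above.

```shell
# Hypothetical helpers for a saved copy of the rootupgrade.sh output.
#   $1: file holding the captured output

# Exit status 0 only if the final "succeeded" message is present.
root_script_succeeded() {
    grep -q 'CLSRSC-325: .*succeeded' "$1"
}

# Print the last step that was started, for example "18 of 18 PostUpgrade".
last_upgrade_step() {
    sed -n "s/.*CLSRSC-595: Executing upgrade step \([0-9]* of [0-9]*\): '\(.*\)'\..*/\1 \2/p" "$1" | tail -1
}

# Example use, as root on the node that was just upgraded:
#   /dumparea/crsapp/grid19c/rootupgrade.sh 2>&1 | tee /tmp/rootupgrade_node1.out
#   root_script_succeeded /tmp/rootupgrade_node1.out && echo "OK to continue"
#   last_upgrade_step /tmp/rootupgrade_node1.out
```

If the script stops partway, the last step printed shows where to start looking in the rootcrs log.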

Now proceed with node 2:

rootupgrade.sh script on node 2 (as root user):

root@node2:~# /dumparea/crsapp/grid19c/rootupgrade.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /dumparea/crsapp/grid19c

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]:

Entries will be added to the /var/opt/oracle/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /dumparea/crsapp/grid19c/crs/install/crsconfig_params

The log of current session can be found at:

/dumparea/orabase/crsdata/node2/crsconfig/rootcrs_node2_2019-08-27_03-09-45PM.log

2019/08/27 15:10:03 CLSRSC-595: Executing upgrade step 1 of 18: 'UpgradeTFA'.

2019/08/27 15:10:03 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.

2019/08/27 15:10:04 CLSRSC-595: Executing upgrade step 2 of 18: 'ValidateEnv'.

2019/08/27 15:10:06 CLSRSC-595: Executing upgrade step 3 of 18: 'GetOldConfig'.

2019/08/27 15:10:06 CLSRSC-464: Starting retrieval of the cluster configuration data

2019/08/27 15:14:37 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.

2019/08/27 15:25:26 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.

2019/08/27 15:25:26 CLSRSC-595: Executing upgrade step 4 of 18: 'GenSiteGUIDs'.

2019/08/27 15:25:26 CLSRSC-595: Executing upgrade step 5 of 18: 'UpgPrechecks'.

2019/08/27 15:25:28 CLSRSC-363: User ignored prerequisites during installation

2019/08/27 15:25:31 CLSRSC-595: Executing upgrade step 6 of 18: 'SetupOSD'.

2019/08/27 15:25:31 CLSRSC-595: Executing upgrade step 7 of 18: 'PreUpgrade'.

ASM configuration upgraded in local node successfully.

2019/08/27 15:25:49 CLSRSC-466: Starting shutdown of the current Oracle Grid Infrastructure stack

2019/08/27 15:25:50 CLSRSC-467: Shutdown of the current Oracle Grid Infrastructure stack has successfully completed.

2019/08/27 15:25:54 CLSRSC-595: Executing upgrade step 8 of 18: 'CheckCRSConfig'.

2019/08/27 15:25:59 CLSRSC-595: Executing upgrade step 9 of 18: 'UpgradeOLR'.

2019/08/27 15:26:06 CLSRSC-595: Executing upgrade step 10 of 18: 'ConfigCHMOS'.

2019/08/27 15:26:07 CLSRSC-595: Executing upgrade step 11 of 18: 'UpgradeAFD'.

2019/08/27 15:26:10 CLSRSC-595: Executing upgrade step 12 of 18: 'createOHASD'.

2019/08/27 15:26:13 CLSRSC-595: Executing upgrade step 13 of 18: 'ConfigOHASD'.

2019/08/27 15:26:14 CLSRSC-329: Replacing Clusterware entries in file '/etc/inittab'

2019/08/27 15:26:59 CLSRSC-595: Executing upgrade step 14 of 18: 'InstallACFS'.

2019/08/27 15:27:53 CLSRSC-595: Executing upgrade step 15 of 18: 'InstallKA'.

2019/08/27 15:27:56 CLSRSC-595: Executing upgrade step 16 of 18: 'UpgradeCluster'.

2019/08/27 15:29:58 CLSRSC-343: Successfully started Oracle Clusterware stack

clscfg: EXISTING configuration version 19 detected.

Successfully taken the backup of node specific configuration in OCR.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

2019/08/27 15:30:24 CLSRSC-595: Executing upgrade step 17 of 18: 'UpgradeNode'.

Start upgrade invoked..

Upgrading CRS managed objects

Upgrading 50 CRS resources

Completed upgrading CRS resources

Upgrading 69 CRS types

Completed upgrading CRS types

Upgrading 11 CRS server pools

Completed upgrading CRS server pools

Upgrading 1 CRS server categories

Completed upgrading CRS server categories

Upgrading 2 old servers

Completed upgrading servers

CRS upgrade has completed.

2019/08/27 15:30:37 CLSRSC-478: Setting Oracle Clusterware active version on the last node to be upgraded

2019/08/27 15:30:38 CLSRSC-482: Running command: '/dumparea/crsapp/grid19c/bin/crsctl set crs activeversion'

Started to upgrade the active version of Oracle Clusterware. This operation may take a few minutes.

Started to upgrade CSS.

CSS was successfully upgraded.

Started to upgrade Oracle ASM.

Oracle ASM was successfully upgraded.

Started to upgrade CRS.

CRS was successfully upgraded.

Started to upgrade Oracle ACFS.

Oracle ACFS was successfully upgraded.

Successfully upgraded the active version of Oracle Clusterware.

Oracle Clusterware active version was successfully set to 19.0.0.0.0.

2019/08/27 15:31:49 CLSRSC-479: Successfully set Oracle Clusterware active version

2019/08/27 15:36:13 CLSRSC-476: Finishing upgrade of resource types

2019/08/27 15:36:58 CLSRSC-477: Successfully completed upgrade of resource types

2019/08/27 15:39:08 CLSRSC-595: Executing upgrade step 18 of 18: 'PostUpgrade'.

Successfully updated XAG resources.

2019/08/27 15:40:01 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

You have new mail in /var/mail/root

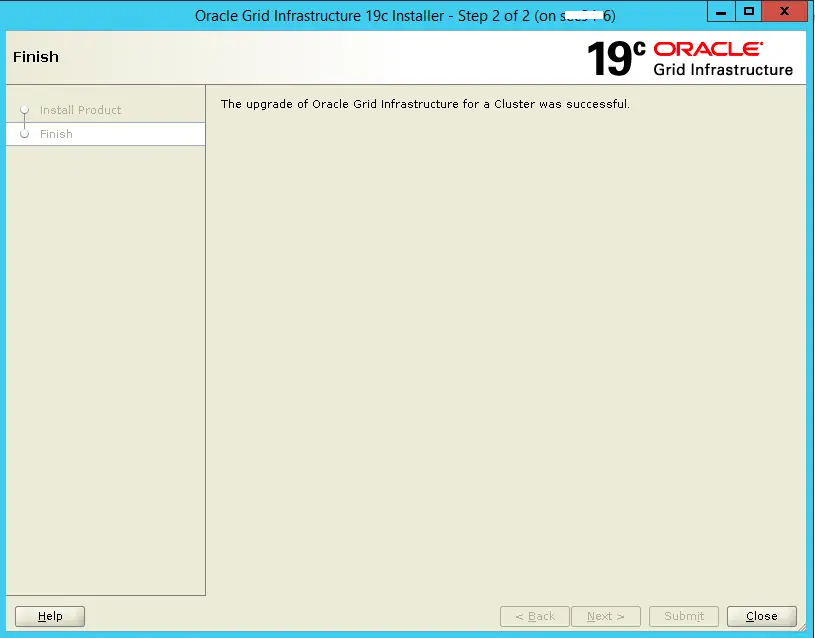

Once the rootupgrade.sh script has completed on both nodes, proceed to resume the installation.

We have successfully upgraded the grid to 19c.

POST CHECK:

oracle@node1:~$ crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [19.0.0.0.0]

oracle@node1:~$ crsctl query crs softwareversion

Oracle Clusterware version on node [node1] is [19.0.0.0.0]

oracle@node2:~$ crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [19.0.0.0.0]

oracle@node2:~$ crsctl query crs softwareversion

Oracle Clusterware version on node [node2] is [19.0.0.0.0]

crsctl stat res -t

crsctl check crs

crsctl stat res -t -init
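The version part of the post check can be automated by collecting the `crsctl query crs ...` output lines from every node and confirming that each one reports the expected version. A minimal sketch, assuming the output format shown above. `all_at_version` is a hypothetical helper name.

```shell
# Hypothetical helper: check that every crsctl output line read from stdin
# ends with the expected [version]. Prints each mismatching line.
#   $1: expected version, for example 19.0.0.0.0
all_at_version() {
    expected=$1
    status=0
    while IFS= read -r line; do
        # Take the last [bracketed] token on the line as the version
        found=$(printf '%s\n' "$line" | sed -n 's/.*\[\([^]]*\)\][^[]*$/\1/p')
        if [ "$found" != "$expected" ]; then
            printf 'MISMATCH: %s\n' "$line"
            status=1
        fi
    done
    return $status
}

# Example use, with one line per node gathered into a file beforehand:
#   all_at_version 19.0.0.0.0 < /tmp/crs_versions.txt && echo "all nodes upgraded"
```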

TROUBLESHOOTING:

1. If the rootupgrade.sh script failed on the local node:

If rootupgrade.sh failed on the local node, either because of an error or because the system was rebooted during the run, analyze the error and fix it. Once fixed, resume the upgrade with the steps below.

Run the rootupgrade.sh script again on node 1:

cd /dumparea/crsapp/grid19c

/dumparea/crsapp/grid19c/rootupgrade.sh

Run the rootupgrade.sh script again on node 2:

cd /dumparea/crsapp/grid19c

/dumparea/crsapp/grid19c/rootupgrade.sh

Once the rootupgrade.sh script is successful on both nodes, we can start gridSetup.sh again with the response file to complete the rest of the upgrade.

The response file can be found at $GRID_HOME_19C/install/response.

Run the gridSetup.sh script with the response file:

./gridSetup.sh -executeConfigTools -responseFile /dumparea/crsapp/grid19c/install/response/gridinstall_5_07_2019.rsp

This will skip the already executed tasks and complete the pending configuration.

2. If the rootupgrade.sh script failed on the remote node:

At this stage, rootupgrade.sh has succeeded on the local node but failed on the remote node. Once the issue is resolved on the remote node, resume the upgrade by running rootupgrade.sh again on the remote node.

On node 2:

cd /dumparea/crsapp/grid19c

/dumparea/crsapp/grid19c/rootupgrade.sh

If the gridSetup.sh GUI is not available, we can start gridSetup.sh using the response file.

./gridSetup.sh -executeConfigTools -responseFile /dumparea/crsapp/grid19c/install/response/gridinstall_5_07_2019.rsp

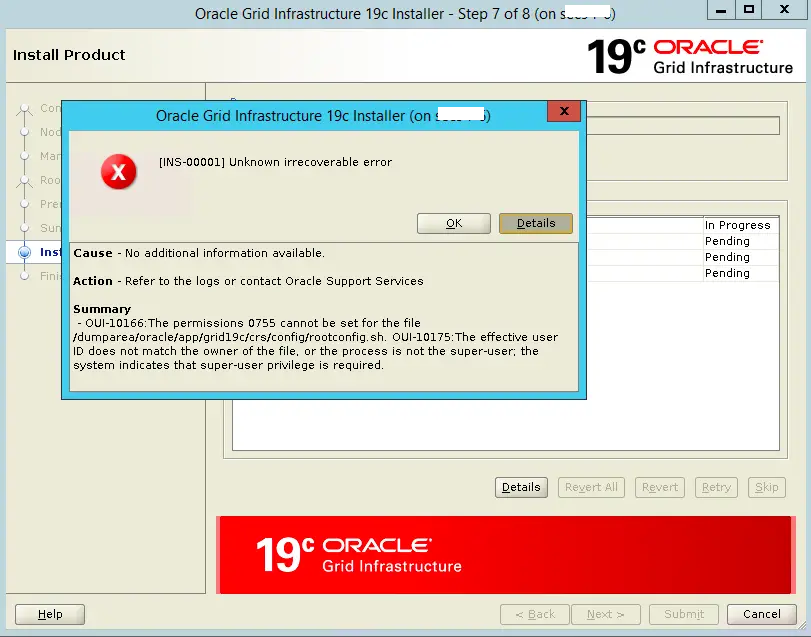

3. OUI-10166: The permissions 0755 cannot be set for the file rootconfig.sh.

While starting the actual upgrade, you may see the following error:

OUI-10166: The permissions 0755 cannot be set for the file $GRID_HOME/crs/config/rootconfig.sh.

OUI-10175: The effective user ID does not match the owner of the file or the process is not the user user.

SOLUTION:

Check the owner of the file $GRID_HOME/crs/config/rootconfig.sh. If it is owned by root, change it to oracle and rerun the gridSetup.sh script.

chown oracle:oinstall $GRID_HOME/crs/config/rootconfig.sh

4. PRVF-5311 : File “/tmp/InstallActions error:

Cause – Either passwordless SSH connectivity is not setup between specified node(s) or they are not reachable. Refer to the logs for more details.

Action – Refer to the logs for more details or contact Oracle Support Services.

PRVF-5311 : File “/tmp/GridSetupActions2022-11-06_03-12-09AM/.getFileInfo150997.out” either does not exist or is not accessible on node.

If this error occurs at the prerequisite stage, you can try the solution below.

Make the following changes to scp:

# Rename the original scp (first find where the scp executable is located).

mv /usr/bin/scp /usr/bin/scp.orig

# Create a new file.

vi /usr/bin/scp

# Add the line below to the newly created file.

/usr/bin/scp.orig -T $*

# Change the file permission to 555.

chmod 555 /usr/bin/scp

Retry the upgrade process.

# Once the upgrade is done, you can revert to the original.

mv /usr/bin/scp.orig /usr/bin/scp
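The wrapper file can also be created without an interactive editor, which is handy when the same workaround has to be repeated on several nodes. The following is a minimal sketch under stated assumptions: `write_scp_wrapper` is a hypothetical helper name, and the wrapper it writes uses `exec` and `"$@"` rather than the plain `$*` line above so that arguments containing spaces are passed through unchanged.

```shell
# Hypothetical helper: write a small wrapper script that calls the renamed
# original scp with the -T option, then make it read and execute only.
#   $1: path of the wrapper to create (for example /usr/bin/scp)
#   $2: path of the renamed original (for example /usr/bin/scp.orig)
write_scp_wrapper() {
    cat > "$1" <<EOF
#!/bin/sh
exec "$2" -T "\$@"
EOF
    chmod 555 "$1"
}

# Example use as root, after the original has been renamed:
#   mv /usr/bin/scp /usr/bin/scp.orig
#   write_scp_wrapper /usr/bin/scp /usr/bin/scp.orig
```

Reverting is the same `mv /usr/bin/scp.orig /usr/bin/scp` step as above, which overwrites the wrapper with the original binary.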

SEE ALSO:

- Useful Srvctl Commands

- Useful CRSCTL Commands

- Useful Gather Statistics Commands In Oracle

- Useful ADRCI Commands In Oracle

- Useful DGMGRL Commands In Oracle Dataguard

- Useful database monitoring queries

- Useful Flashback Related Commands

- Useful scheduler job Related Commands

- Useful Oracle Auditing Related Commands

- Useful RMAN Commands

- Useful TFACTL Commands

You wrote

> Dry run phase will not do any changes to the existing grid setup. It will just check the system readiness.

but you forgot to add an important note:

… “and restarting GI services” on the local node. In my opinion it is very important.

Dear,

Dry run will not restart the GI services.

I also hoped so, but it was brutally verified by the real situation on the running system 🙁

You mean that after the dry run upgrade, your CRS was down? When I did it on my test environment, my CRS was up and running.

I tried using this note, and the dry run brought down the DB, ASM and CRS on the local node when I ran the root script. The same thing happened on the remote node as well, so you can add that the dry run will restart GI services and make an entry in the central inventory.

Vivek

Hi Grzegorz,

Yes, it will restart the GI stack during the dry run due to a documented bug; I verified the same with Oracle Support as well. To avoid the service restart during the dry run you should apply RU6, I guess (not exactly sure). I applied RU 10 and did the dry run, and the services didn't stop. You can apply the RU to the grid home before installation with the step below.

$ ./gridSetup.sh -applyRU <RU patch location>

After patching you will still face another bug, where the patch is not recognized. For that you have to apply another one-off patch. I will update the patch details soon.

If you do the above, you will avoid the service restart during the dry run.

Thanks

Hi, I am trying to upgrade GI from 12.1.0 to 19.3 in RAC and I am getting the below warning during the cluvfy pre-check run. Can it be ignored, or does it need to be fixed before starting the upgrade?

Pre-check for cluster services setup was successful.

Verifying resolv.conf Integrity …WARNING

PRVG-2016 : File “/etc/resolv.conf” on node “XXXXXXXXXX” has both ‘search’ and ‘domain’ entries. (I get this for both nodes.)

There are also a couple of PRVG-11250 messages, which say the check requires running as root.

Please clarify.

The warning can be ignored. However, if it is production, it is better to cross-check all the warnings and make the run warning-free.

After a successful upgrade from 12.2 to 19.3, whenever the cold database backup runs and the database is started using srvctl start database -d, we get the TNS error ORA-12514. What could be the problem? It gets resolved only after issuing srvctl update database every time. We have even removed the srvctl services and added them back, but no luck!

Can you share the output of srvctl config database -d DB_NAME??

Every time we run it, the file name changes.

As per the log, the location is: “/tmp/GridSetupActions2020-11-06_04-44-39AM/scdcb0000160.getFileInfo52467.out”

but we do not see any such file in that location, which means the file does not exist.

SCDCB0000160:/tmp/GridSetupActions2020-11-06_04-44-39AM # ls

CVU_19.0.0.0.0_oracle

SCDCB0000160:/tmp/GridSetupActions2020-11-06_04-44-39AM # pwd

/tmp/GridSetupActions2020-11-06_04-44-39AM

SCDCB0000160:/tmp/GridSetupActions2020-11-06_04-44-39AM #

SCDCB0000160:/tmp/GridSetupActions2020-11-06_04-44-39AM #

SCDCB0000160:/tmp/GridSetupActions2020-11-06_04-44-39AM # ls -lrt

total 4

drwxr-x--- 3 oracle oinstall 4096 Nov 6 04:51 CVU_19.0.0.0.0_oracle

SCDCB0000160:/tmp/GridSetupActions2020-11-06_04-44-39AM # ls -lrt | grep scdcb*

SCDCB0000160:/tmp/GridSetupActions2020-11-06_04-44-39AM #

How do we proceed further?

While running the dry run, the passwordless SSH setup test fails with the below error.

The setup completed successfully, but when we tried to TEST it, it gave the below error.

Cause – Either passwordless SSH connectivity is not setup between specified node(s) or they are not reachable. Refer to the logs for more details. Action – Refer to the logs for more details or contact Oracle Support Services.

PRVF-5311 : File “/tmp/GridSetupActions2020-11-06_03-12-09AM/scdcb0000160.getFileInfo150997.out” either does not exist or is not accessible on node “scdcb0000160”.

Make sure passwordless SSH connectivity is enabled between the nodes before proceeding.

Any luck on this issue “PRVF-5311” ?

Hi Velu,

For the PRVF-5311 error, try the steps below.

Make the following changes to scp:

# Rename the original scp (first find where the scp executable is located).

mv /usr/bin/scp /usr/bin/scp.orig

# Create a new file.

vi /usr/bin/scp

# Add the line below to the newly created file.

/usr/bin/scp.orig -T $*

# Change the file permission.

chmod 555 /usr/bin/scp

Once the upgrade is done, you can revert to the original.

mv /usr/bin/scp.orig /usr/bin/scp

Could you please provide a similar upgrade document for a Windows 64-bit environment as well?

Hi Komal,

We don't have a Windows RAC environment to test this.

Thank you for the reply. Hard luck!

Do we need downtime for the Grid upgrade from 12.1 to 19c?

Yes you need downtime.

We still have the option of a rolling GI upgrade, and we can do that without actual business downtime.

This post covers only the Grid upgrade. What about the database upgrade to 19c?

Please share any links if available.

Please check below link.

https://dbaclass.com/article/steps-for-upgrading-oracle-database-to-19c/

Hi. Thanks a lot for such a detailed presentation. We have Oracle 13.4 Cloud Control monitoring our Exadata machines, and they have the current 12.2 GI home registered. After the GI home upgrade, do we have to manually discover the new GI home and all the services from the new 19c GI home in Oracle 13c Cloud Control, or will it get updated automatically? We need this information urgently.