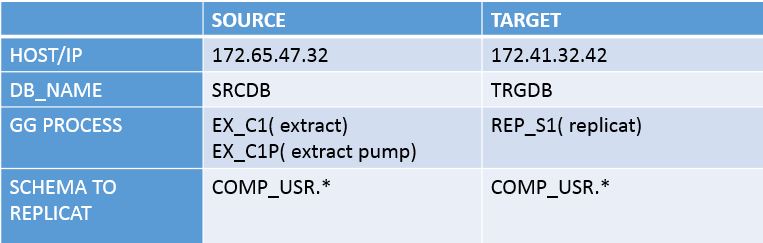

In this tutorial, we will explain how to do schema replication in goldengate. Schema replication means, replicating all the tables of a schema.

1. add supplemental for all tables of a schema [ SOURCE ]

add trandata <schema_name>.*

GGSCI > dblogin userid ggate_user, password ggate_user Successfully logged into database. GGSCI > ADD TRANDATA COMP_USR.* Logging of supplemental redo data enabled for table COMP_USR.TAB1. TRANDATA for scheduling columns has been added on table 'COMP_USR.TAB1'. TRANDATA for instantiation CSN has been added on table 'COMP_USR.TAB1'. Logging of supplemental redo data enabled for table COMP_USR.TAB2. TRANDATA for scheduling columns has been added on table 'COMP_USR.TAB2'. TRANDATA for instantiation CSN has been added on table 'COMP_USR.TAB2'. Logging of supplemental redo data enabled for table COMP_USR.TAB3. TRANDATA for scheduling columns has been added on table 'COMP_USR.TAB3'. TRANDATA for instantiation CSN has been added on table 'COMP_USR.TAB3'.

2. Prepare extract file [ SOURCE ]

ggsci> edit params EX_C1 EXTRACT EX_C1 SETENV (ORACLE_SID="SRCDB") SETENV (ORACLE_HOME="/oracle/app/oracle/product/12.1.0.2/dbhome_1") userid ggate_user, password ggate_user FETCHOPTIONS FETCHPKUPDATECOLS, USESNAPSHOT, USELATESTVERSION DBOPTIONS ALLOWUNUSEDCOLUMN CACHEMGR CACHESIZE 500M DISCARDFILE /ggate_user/DISCARD/CRM_B2B1.dsc, APPEND, MEGABYTES 100 EXTTRAIL /ggate_user/TRAILFILE/S2 WARNLONGTRANS 6H, CHECKINTERVAL 1H TABLE COMP_USR.*;

3. Prepare extract pump parameter file [ SOURCE ]

ggsci>edit params EX_C1P EXTRACT EX_C1P userid ggate_user, password ggate_user SETENV (ORACLE_HOME="/oracle/app/oracle/product/12.1.0.2/dbhome_1") RMTHOST 172.41.32.42, MGRPORT 7809 RMTTRAIL /ggate/install/datacapture/T2 DBOPTIONS ALLOWUNUSEDCOLUMN TABLE COMP_USR.*;

4. Add extract process [SOURCE]

add extract EX_C1,tranlog,begin now EXTRACT added.

5. Add the extract trail [SOURCE]

GGSCI > add exttrail /ggate_user/TRAILFILE/S2,extract EX_C1 EXTTRAIL added.

6. Add the extract pump process [SOURCE]

GGSCI > add extract EX_C1P, exttrailsource /ggate_user/TRAILFILE/S2 EXTRACT added.

7. Add remote trail [SOURCE]

GGSCI > add rmttrail /ggate/install/datacapture/T2,extract EX_C1P RMTTRAIL added.

8. Prepare replicat file [ TARGET ]

Here we have to add HANDLECOLLISION parameter to resolve conflicts for the transactions during initial loading. We will remove this parameter once the lag is cleared after initial loading.

As per Oracle DOC:

Use the HANDLECOLLISIONS and NOHANDLECOLLISIONS parameters to control whether or not Replicat tries to resolve duplicate-record and missing-record errors when applying SQL on the target. These errors, called collisions, occur during an initial load, when data from source tables is being loaded to target tables while Oracle GoldenGate is replicating transactional changes that are being made to those tables. When Oracle GoldenGate applies the replicated changes after the load is finished, HANDLECOLLISIONS provides Replicat with error-handling logic for these collisions.

ggsci> edit params REP_S1 REPLICAT REP_S1 SETENV (ORACLE_HOME = "/u01/app/oracle/product/12.1.0/db_1") SETENV (ORACLE_SID = "TRGDB") USERID ggate_user, PASSWORD ggate_user DBOPTIONS SUPPRESSTRIGGERS,DEFERREFCONST ASSUMETARGETDEFS HANDLECOLLISIONS DISCARDFILE /ggate/install/datacapture/discardcrm.dsc,append MAP COMP_USR.*,TARGET COMP_USR.*;

9. add the replicat process [ TARGET ]

GGSCI > add replicat REP_S1, exttrail /ggate/install/datacapture/T2 REPLICAT added.

10.Start the extract and pump process [ SOURCE ]

START EX_C1 START EX_C1P GGSCI > info all Program Status Group Lag at Chkpt Time Since Chkpt MANAGER RUNNING EXTRACT RUNNING EX_C1 00:00:00 00:00:03 EXTRACT RUNNING EX_C1P 00:00:00 00:02:34

11. Initial loading:

Now export this schema using expdp in the source database and import in the target database.

--- SOURCE DB expdp dumpfile=initload.dmp logfile=initload.log directory=DIRT schemas=COMP_USR Username: / as sysdba Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production With the Partitioning, OLAP, Data Mining and Real Application Testing options FLASHBACK automatically enabled to preserve database integrity. Starting "SYS"."SYS_EXPORT_TABLE_02": /******** AS SYSDBA dumpfile=initload.dmp logfile=initload.log directory=DIRT schemas=COMP_USR Estimate in progress using BLOCKS method... Processing object type TABLE_EXPORT/TABLE/TABLE_DATA Total estimation using BLOCKS method: 16 MB Processing object type TABLE_EXPORT/TABLE/INDEX/STATISTICS/INDEX_STATISTICS . . exported "COMP_USR"."TAB1" 3.473 MB 90323 rows . . exported "COMP_USR"."TAB2" 3.473 MB 90323 rows . . exported "COMP_USR"."TAB3" 3.473 MB 90323 rows Master table "SYS"."SYS_EXPORT_TABLE_02" successfully loaded/unloaded ****************************************************************************** Dump file set for SYS.SYS_EXPORT_TABLE_02 is: /archive/dump/initload.dmp -- TRANSFER THE DUMP TO TARGET SERVER: scp oracle@**********:/archive/dump/initload.dmp . -- -IMPORT IN TARGET DATABASE: impdp dumpfile=initload.dmp logfile=imp_initialload.log directory=FULL_REFRESH Import: Release 12.1.0.2.0 - Production on Sun Jul 23 12:01:58 2017 Copyright (c) 1982, 2014, Oracle and/or its affiliates. All rights reserved. Username: / as sysdba Connected to: Oracle Database 12c Enterprise Edition Release 12.1.0.2.0 - 64bit Production With the Partitioning, OLAP, Advanced Analytics and Real Application Testing options Master table "SYS"."SYS_IMPORT_FULL_04" successfully loaded/unloaded import done in AL32UTF8 character set and AL16UTF16 NCHAR character set export done in AL32UTF8 character set and UTF8 NCHAR character set WARNING: possible data loss in character set conversions Starting "SYS"."SYS_IMPORT_FULL_04": /******** AS SYSDBA dumpfile=initload.dmp logfile=imp_initialload.log directory=FULL_REFRESH Processing object type TABLE_EXPORT/TABLE/PROCACT_INSTANCE Processing object type TABLE_EXPORT/TABLE/TABLE Processing object type TABLE_EXPORT/TABLE/TABLE_DATA . . imported "COMP_USR"."TAB1" 3.473 MB 90323 rows . . imported "COMP_USR"."TAB2" 3.473 MB 90323 rows . . imported "COMP_USR"."TAB3" 3.473 MB 90323 rows Processing object type TABLE_EXPORT/TABLE/CONSTRAINT/CONSTRAINT

12. Start the replicat: [ SOURCE ]

GGSCI > start REP_S1 Sending START request to MANAGER ... REPLICAT REP_S1 starting

Use LAG command to monitor the lag at replicat.

GGSCI > lag replicat REP_S1 2017-07-24 15:33:12 INFO OGG-14054 Lag from heartbeat table requires DBLOGIN. Sending GETLAG request to REPLICAT REPR1 ... Last record lag 6 seconds. GGSCI > ! 2017-07-24 15:33:12 INFO OGG-14054 Lag from heartbeat table requires DBLOGIN. Sending GETLAG request to REPLICAT REP_S1 ... Last record lag 6 seconds. At EOF, no more records to process. -------- >>>>>>>>> This indicates lag is zero.

As lag is zero now, Remove the handlescollision parameter and restart the replicat.

13. Remove handlecollison parameter and restart replicat[ TARGET ]

REPLICAT REP_S1 SETENV (ORACLE_HOME = "/u01/app/oracle/product/12.1.0/db_1") SETENV (ORACLE_SID = "TRGDB") USERID ggate_user, PASSWORD ggate_user DBOPTIONS SUPPRESSTRIGGERS,DEFERREFCONST ASSUMETARGETDEFS --HANDLECOLLISIONS DISCARDFILE /ggate/install/datacapture/discardcrm.dsc,append MAP COMP_USR.*,TARGET COMP_USR.*;

ggsci>stop REP_S1 ggsci>start REP_S1

goldengate Schema replication has been completed.

what is the difference between REP_S1 and REPR1

add replicat REPR1, exttrail /ggate/install/datacapture/T2

Dear,

That is a mistake, I have corrected. It should be REP_S1 only.

GGSCI > add replicat REP_S1, exttrail /ggate/install/datacapture/T2

Thanks for reporting. this.

add replicat REP_S1, exttrail /u01/app/oracle/product/TARGETGG/T2

ERROR: No checkpoint table specified for ADD REPLICAT.

please check this.

https://dbaclass.com/article/error-no-checkpoint-table-specified-for-add-replicat/

do i need to create check point each table becuase i have 1000 table

my requirement is to create one more database same as prod want to make active active

No , one checkpoint table for the target db where replicat is running .

How to do step 11 Initial loading when Migating database from Sql Server 2016 to MySql 8.0, as Source will be having data types like FLOAT, TINYINT, BIGINT etc.,

Well explained Thanks